From Pilot to Platform - Aladdin Copilot

Pedro Vicente-Valdez

Brennan Rosales

Summary

This talk covers the development and deployment of BlackRock's Aladdin Copilot:

- Aladdin Copilot Overview:

- The system is described as a framework using LangChain for its orchestration graph.

- It includes a "Plugin Registry" through which engineering teams integrate their domain-specific functionality as tools or custom agents.

- Rolling Out Agents:

- The framework is used to deploy agents across various user groups and embedded across numerous Aladdin front-end applications.

- Evaluation and Testing:

- The system undergoes extensive evaluation, including testing every intended behavior in system prompts and end-to-end tests daily to ensure correct routing and performance.

- The approach applies the test-driven development mindset to LLMs: evaluation happens during development.

- Ground Truth Data:

- End-to-end tests rely on ground truth data supplied by the teams that own each plugin, which describes how a given user query is supposed to be solved.

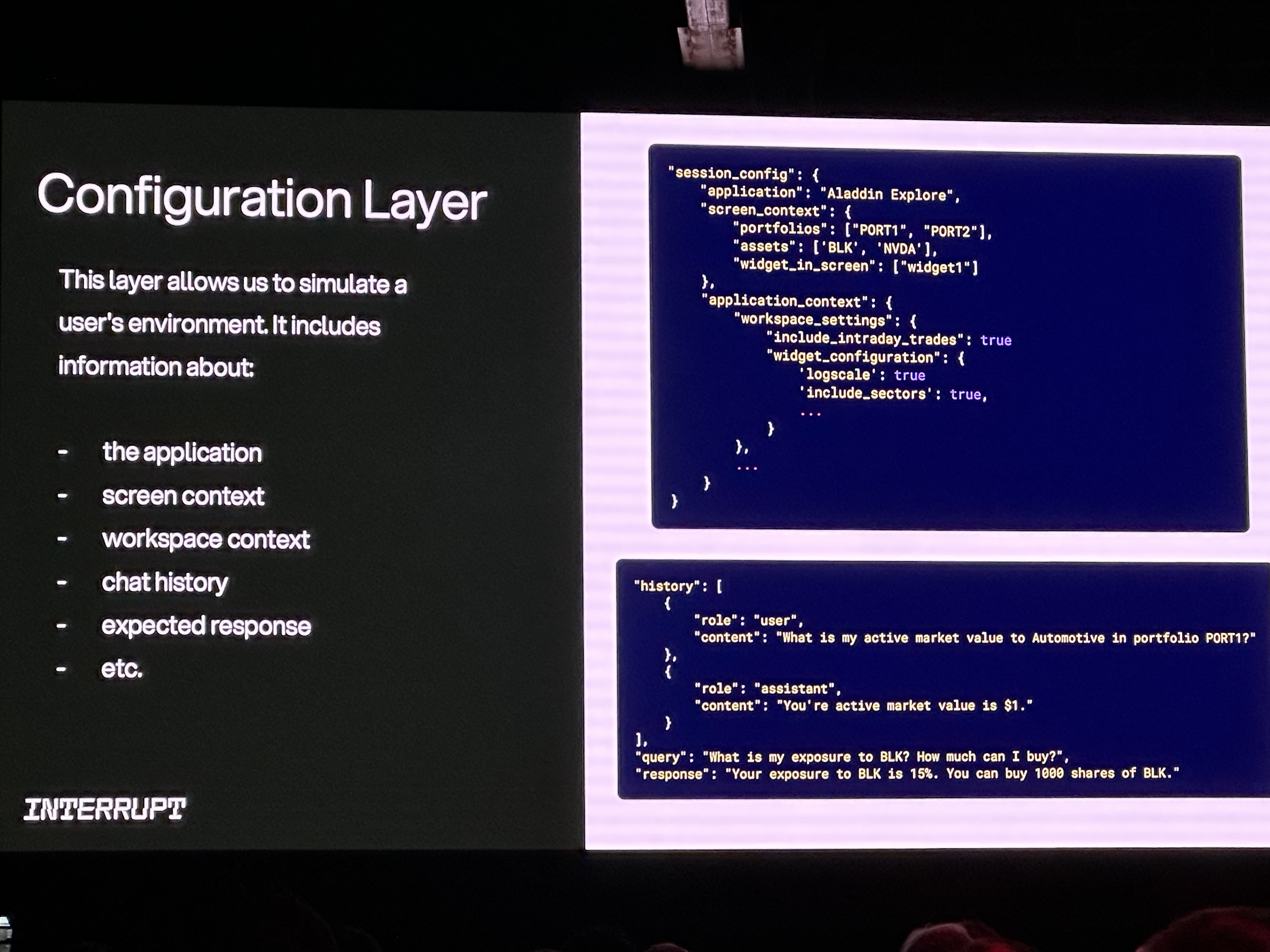

- User Configuration Layer for Testing:

- Allows developers to configure detailed testing scenarios, including application context, user screen context (portfolios, assets, widgets), and multi-turn interactions.

- AI Strategy:

- BlackRock's AI strategy focuses on increasing productivity, driving alpha generation, and personalizing user experience through Aladdin Copilot.

- Robustness and reliability are ensured through continuous evaluation integrated into CI/CD pipelines.

In essence, BlackRock is leveraging LangChain and a sophisticated evaluation framework to build and deploy the scalable and reliable Aladdin Copilot system, enhancing their investment management platform.

Transcript

Good afternoon, everyone. My name is Brennan Rosales and I am an AI engineering lead at BlackRock. I'm joined today by my friend and colleague Pedro Vicente Valdez, principal AI engineer. We're extremely excited to be here today to share with you how BlackRock is leveraging AI to really transform the investment architecture supporting...

So let's start out with a quick primer on what BlackRock is and what Aladdin is. BlackRock is one of the world's leading asset managers, with over $11 trillion in AUM. Ultimately, our main goal is to help people experience financial well-being, and the Aladdin platform is key to our ability to deliver on that goal. Aladdin is a proprietary technology platform that unifies the investment management process. It provides asset managers with a whole-portfolio solution that gives them access to both public and private markets and allows them to cover the needs of both institutional and retail investors. This system is built by the industry, for the industry. BlackRock is the biggest user of Aladdin, but we also sell to and support hundreds of clients globally across 70 countries, all using the Aladdin platform. The Aladdin organization is made up of around 7,000 people, with over 4,000 of those being engineers. These engineers build and maintain the roughly 100 Aladdin front-end applications that thousands of people use every day.

Now, how do we think about approaching AI, and how do we see that changing the way people experience Aladdin today? We see three key outcomes at the BlackRock firm level. We would really like to increase everyone's productivity, we would love for AI to drive alpha generation, and we want to give people a much more personalized experience with the platform. One way we're realizing this vision is through the Aladdin Copilot initiative.
The Aladdin Copilot application is currently embedded across all of those hundred front-end applications that I referenced earlier. This copilot acts as the connective tissue across the platform, proactively surfacing relevant content for you at the right moment and fostering productivity at each step along the way. We like to think about three core value drivers within the scope of Aladdin Copilot. The first is that we want every user of the Aladdin software to become an Aladdin expert, and we can do that by enabling a much more intuitive user experience. The second has to do with increasing the personalization and customization of the platform, through which our users will be able to unlock new efficiencies via highly configurable experiences. The third is to democratize access to all of these insights. If it is much easier for our users to find the data points they need, then at the end of the day they will be much better equipped to make better decisions.

So here is a high-level overview of the architecture supporting Aladdin Copilot today. I'll take you through the life cycle of a user query. But before I dive into that, I want to point your attention to the top right here, to a component we call the Plugin Registry. There are, let's say, 50 or 60 Aladdin engineering teams that cover specific domains throughout the platform. One example is the trading team: they own all the services that serve trading data. Pedro and I are not experts in these business and financial domains. Our job is to make it as easy as possible for these engineers to plug the functionality that they own into this system, and we do that through this Plugin Registry. Today we give these development teams two ways to onboard.
The first is a tool, and we have come to define a tool as mapping one-to-one to an existing Aladdin API already out in production. The second onboarding path, which we give to development teams with very complex workflows, is the ability to spin up a custom agent and plug that into the system. One important callout here: we started designing this thing two, two and a half years ago, and back then we needed to figure out a standardized agent communication protocol. We came up with a very slim, very specific version of that for Aladdin. But as newer, more established protocols like LangChain's Agent Protocol and A2A come out, we're actively evaluating those solutions.

So now I can point your attention to the left side of the screen, since I'm out of the way. We're going to start with the query "What is my exposure to aerospace in Portfolio 1?" At the point in time when the user submits their query, we have a lot of very useful and relevant context. That includes which Aladdin application they're asking the question from and what they actually have up on the screen: which portfolios, which assets, which widgets they are looking at. In addition to that, there is a set of predefined global settings throughout these Aladdin apps, and ideally we respect those preferences as we make all these calls on behalf of the user.

Once a query gets submitted, we enter our orchestration graph. From day one, it's been built using LangChain. The first node in that graph is an input guardrail node, and it covers a lot of the needs for our responsible AI moderation. At this point in the graph, we're trying to find off-topic and toxic content and deal with that appropriately. We would also like to identify all of the relevant PII at this point and make sure that we're handling that as carefully as possible.
Once we get past that node, we go into the filtering and access control node. That's the point where we have the set of tools and agents that are registered in the Plugin Registry. When teams onboard functionality to the registry, they have complete control over which environments the plugin is enabled in, which user groups have access to it, and even which Aladdin apps can access it. Within this node, it's also important for us to try our best to shrink the searchable universe of plugins, because once we get to our planning step, if we're sending more than 40 or 50 tools, we probably won't get very good performance.

So we leave that filtering and access control node with a set of, say, 20 to 30 tools and agents, and we enter our orchestration node. There, we're very reliant on GPT-4 function calling today. We essentially just iterate over a planning and action node. We go through that as many times as needed, and at some point GPT-4 says either, "Hey, you've got the answer, go back to the user," or, "I'm unable to find this answer." We take that final answer, pass back out through our output guardrail nodes, try to detect hallucinations as best we can, and then we end up back on the left side and tell the user that their exposure to the aerospace sector in this portfolio is 5%.

So that's a bit of high-level information on the architecture of the system. I'm going to pass it to Pedro here to talk about how we think about evaluating the system.

Speaker B: Yeah, so I think one thing is obvious from the presentations that we've been seeing: we're all using supervisor. I see very few agent-to-agent autonomous conversations, and we are just one more of those presentations using supervisor. Why? Because it's very easy to build, it's very easy to release, and it's very easy to test.
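
The filtering step and the supervisor-style plan/act loop described above can be sketched roughly as follows. This is a minimal illustration, not BlackRock's implementation: the `Plugin` and `QueryContext` fields, the plugin names, the stub planner (standing in for GPT-4 function calling), and the simple truncation to `limit` plugins are all assumptions made for the example, and the input and output guardrail nodes are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plugin:
    """Hypothetical registry entry: a tool (one API) or a custom agent."""
    name: str
    kind: str                     # "tool" or "agent"
    environments: frozenset[str]  # environments where the plugin is enabled
    user_groups: frozenset[str]   # user groups allowed to use it
    apps: frozenset[str]          # front-end apps allowed to call it


@dataclass(frozen=True)
class QueryContext:
    """Hypothetical context that arrives with the user query."""
    environment: str
    user_groups: frozenset[str]
    app: str


def filter_plugins(registry, ctx, limit=30):
    """Keep only plugins this user may see, capped at `limit`.

    Truncation is an assumption; a real system would likely rank by relevance.
    """
    allowed = [
        p for p in registry
        if ctx.environment in p.environments
        and ctx.user_groups & p.user_groups
        and ctx.app in p.apps
    ]
    return allowed[:limit]


def run_supervisor(query, plugins, planner, execute, max_steps=5):
    """Alternate planning and action until the planner returns a final answer."""
    by_name = {p.name: p for p in plugins}
    observations = []
    for _ in range(max_steps):
        action, value = planner(query, plugins, observations)
        if action == "final":
            return value
        if value not in by_name:
            observations.append(f"unknown plugin: {value}")
            continue
        observations.append(execute(by_name[value], query))
    return "I'm unable to find this answer."


# Stubs standing in for the LLM planner and the real API call.
def stub_planner(query, plugins, observations):
    if observations:
        return ("final", f"Exposure: {observations[-1]}")
    return ("call", "get_exposure")


def stub_execute(plugin, query):
    return "5%"


registry = [
    Plugin("get_exposure", "tool", frozenset({"dev", "prod"}),
           frozenset({"portfolio_managers"}), frozenset({"portfolio_app"})),
    Plugin("place_order", "agent", frozenset({"dev"}),
           frozenset({"traders"}), frozenset({"trading_app"})),
]
ctx = QueryContext("prod", frozenset({"portfolio_managers"}), "portfolio_app")
visible = filter_plugins(registry, ctx)
answer = run_supervisor(
    "What is my exposure to aerospace in Portfolio 1?",
    visible, stub_planner, stub_execute,
)
```

Keeping the planner and executor as plain callables is one way to get the testability Pedro mentions: the loop can be exercised with stubs before any model is involved.
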
So hopefully next year we are introducing autonomous agents. But how do we evaluate the system that we just presented? Brennan walked you through the lifecycle of a query. There are many places where you can make a wrong decision. It can be in the initial moderation, in the guardrails, or in the individual components of the guardrails. On the filtering: did we not reduce the universe correctly? Did the orchestration take a wrong turn at some point? And all the way back to the hallucination blocks.

Speaker A: On content that was not actually a hallucination?

Speaker B: So much. So many systems in place. So how exactly do we gain confidence in this before it is deployed to all our clients? This is the actual production orchestration, like Brennan said, and this is just an example of a LangSmith trace for one single tool call. We go through input guardrails, filtering, planning, the actual tool calling, communicating with the other agents, coming back, planning again to see if we answered the question, and then some output guardrails custom to our domain.

So we first start with system prompts. I know we've been hearing a lot about evaluation, and we're going to keep telling you about it, because I think the same lessons are being learned across all of us. It's very evident that, just as in traditional coding you do test-driven development, it's no different in the world of LLMs: you have to do evaluation during development. So one of the places where we started: where are we calling the LLMs? We have a system prompt. Why are we writing what we are writing in that system prompt to begin with? We are in finance, after all, so we are very paranoid about telling the user the wrong things. So we start by testing every single behavior that we are intending by writing it in the system prompt. For example, if I write, "You are Aladdin Copilot. You must never provide investment advice." What is investment advice?
I need to generate a lot of synthetic data. I need to generate a lot of data with the subject matter experts. I need to collect all of that, build an evaluation pipeline, and make sure that we are never going to give investment advice. Again, this is a very dummy example, but we do this for every system prompt and for every intended behavior we code into our system. Paranoid? Yes, but we don't want to have a Chevrolet moment or something, right? So for that we have a lot of LLM-as-a-judge evaluators that specifically check that we're doing the right thing.

We do this for every system prompt that we have, and at the end of the day we have a report. This is a very important part of our CI/CD pipelines. It runs every day, and it runs on every PR. Why? Because we are a bunch of developers. Every day we are improving the system and every day we are releasing to the development environment, and you want to know if you're breaking stuff; you want to know if you're reducing the performance of the system. It's very easy to chase your own tail with LLMs, so this is exactly what lets us move really fast in the area.

Now, this is only the system prompts. What about the orchestration, agent-to-agent communication, and agent-to-API communication? How do we make sure that we fill out the right parameters in those API calls, and that we send the right phrasing to the other agents? That is where we give an end-to-end testing capability to all the Aladdin developers and engineers that we have. We start with the configuration layer, which is where we allow developers to configure the testing scenario. We have a lot of applications, like Brennan said. Which Aladdin application are you in? What is the user seeing in that screen context? What portfolios are loaded, what assets are loaded, what widgets are enabled? Then, in the application context, they often have some settings in the system that they want respected.
We need to make sure that we take those into account during the planning phase when calling those APIs. And of course we allow them to customize multi-turn scenarios for that testing. For example, here's a little bit of history, the query, and the final response. So that is the configuration layer: the query, the response, and the chat history.

In the next step we ask them to provide us the solution layer. For every plugin that comes into the system, we ask them for ground truth. That's basically how Brennan and I are able to guarantee that Aladdin Copilot is going to be performant and able to route in whatever scenario, in whatever context. So we are very dependent on that ground truth data, and the solution layer is where they tell us how the user query in that ground truth is supposed to be solved. It might take separate threads. It might take separate, unrelated threads. For example, in thread 1 I need to get an exposure. Or let's say I want to know how much of BlackRock I can buy in portfolio X: I need to know how many shares I can buy and stay compliant, and then I need to know how much cash I have in that particular portfolio. This structure allows us to test those multi-step, multi-turn interactions and have some confidence in our system that we're doing the right thing.

And there's a little Apple account prompt here that's being used to sign into something. It's just not letting me change. But anyway, the next slide is basically just a lesson. End to end, we run this every day, every night, to make sure we're routing correctly and solving the queries correctly. We tell the teams, "Hey, this is how we're performing for you." So we're working on federating the agent development of... Yes.
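
The configuration layer and solution layer described above could be modeled along these lines. This is a hedged sketch only: every class, field, plugin name, and parameter below is hypothetical, and scoring a run by "each expected thread appears in order somewhere in the actual call trace" is an assumed matching rule, not one stated in the talk.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Configuration layer: the context in which the test query is asked."""
    app: str
    portfolios: list[str]
    assets: list[str]
    widgets: list[str]
    settings: dict[str, str]
    chat_history: list[tuple[str, str]]  # (role, message) pairs for multi-turn
    query: str
    expected_response: str


@dataclass
class ExpectedCall:
    plugin: str
    params: dict[str, str]


@dataclass
class Solution:
    """Solution layer: independent threads of calls; order matters within a thread."""
    threads: list[list[ExpectedCall]]


def thread_satisfied(thread, actual_calls):
    """True if the expected calls occur in order (as a subsequence) in the trace."""
    remaining = iter(actual_calls)
    return all(
        any(exp.plugin == name and exp.params == params for name, params in remaining)
        for exp in thread
    )


def score(solution, actual_calls):
    """Fraction of expected threads found in the actual call trace."""
    if not solution.threads:
        return 1.0
    hits = sum(thread_satisfied(t, actual_calls) for t in solution.threads)
    return hits / len(solution.threads)


scenario = Scenario(
    app="portfolio_app",
    portfolios=["X"],
    assets=["BLK"],
    widgets=["holdings"],
    settings={"currency": "USD"},
    chat_history=[("user", "Open portfolio X"), ("assistant", "Portfolio X is loaded.")],
    query="How much BlackRock can I buy in portfolio X?",
    expected_response="A share count bounded by compliance limits and available cash.",
)
solution = Solution(threads=[
    [ExpectedCall("compliance_limit", {"portfolio": "X", "ticker": "BLK"})],
    [ExpectedCall("cash_balance", {"portfolio": "X"})],
])

# Unrelated threads may interleave in any order in the real trace.
good_trace = [
    ("cash_balance", {"portfolio": "X"}),
    ("compliance_limit", {"portfolio": "X", "ticker": "BLK"}),
]
# A wrong parameter (portfolio Y) breaks one of the two threads.
bad_trace = [
    ("cash_balance", {"portfolio": "Y"}),
    ("compliance_limit", {"portfolio": "X", "ticker": "BLK"}),
]
good_score = score(solution, good_trace)
bad_score = score(solution, bad_trace)
```

Comparing parameters as well as plugin names reflects the concern raised earlier about filling out the right parameters in API calls, not only routing to the right plugin.
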
So all of this is just to say, as many of us have been saying, that evaluation during development is very important, especially when you're trying to federate it in a large enterprise. Otherwise it's, "Hey, my individual query is not working. Hey, this other query is not working." You need a statistical way of saying that your system is working, that it's improving and not deteriorating, so that you can continue to build scalable products. Otherwise, it's very hard. So, again, I think the biggest lesson I have as an engineering lead in the organization is that having this end-to-end evaluation as part of your CI/CD processes is very important for you to know when you're breaking the system. That being said, thank you so much.

Speaker A: Thank you so much.

Speaker B: Thank you.

Speaker A: Yeah, I don't mind, but...

Speaker B: Oh, I see. But now you're hoarding all gazes. It's like a king.

Speaker A: I came in. I came in late.
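
The "statistical way of saying that your system is working" that Pedro asks for could take the form of a per-behavior pass-rate comparison in CI. The sketch below is an assumption about how such a gate might look: the behavior names, the baseline values, and the `tolerance` threshold are all hypothetical.

```python
def pass_rate(results: list[bool]) -> float:
    """Fraction of evaluation cases that passed (0.0 if there are none)."""
    return sum(results) / len(results) if results else 0.0


def find_regressions(
    baseline: dict[str, float],
    current: dict[str, list[bool]],
    tolerance: float = 0.02,
) -> dict[str, tuple[float, float]]:
    """Return behaviors whose pass rate fell more than `tolerance` below baseline."""
    regressions = {}
    for behavior, results in current.items():
        rate = pass_rate(results)
        previous = baseline.get(behavior, 0.0)
        if rate < previous - tolerance:
            regressions[behavior] = (previous, rate)
    return regressions


# Hypothetical numbers: yesterday's report versus today's run on a PR.
baseline = {"no_investment_advice": 1.0, "routing": 0.9}
current = {
    "no_investment_advice": [True] * 9 + [False],
    "routing": [True] * 9 + [False],
}
regressions = find_regressions(baseline, current)
# A CI job could fail the build whenever `regressions` is non-empty.
```
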