Welcome & Product Keynote

Harrison Chase

YouTube Video

https://www.youtube.com/watch?v=DrygcOI-kG8

Summary

Harrison Chase of LangChain kicks off by introducing the concept of the "agent engineer," a new role requiring a blend of skills in prompting, product development, software engineering, and machine learning. He outlines LangChain's core beliefs for the future of AI agents:

- Agents will rely on many different models: Highlighting the need for model optionality, LangChain serves as an integration layer, allowing developers to choose from a diverse ecosystem of models based on their specific strengths (cost, speed, reasoning).

- Reliable agents start with the right context: Emphasizing the criticality of prompt engineering and context construction, LangGraph is presented as a low-level, unopinionated framework for developers to control agent orchestration.

- Building agents is a team sport: LangSmith is positioned as a collaborative platform for observability, evaluations (evals), and prompt engineering, catering to the multidisciplinary nature of agent development.

Chase notes that agents are already gaining significant traction (evidenced by LangSmith trace volumes) and that AI observability is distinct, requiring tools tailored for agent engineers. LangChain is launching new agent-specific metrics in LangSmith, LangGraph pre-builts for common architectures, LangGraph Studio v2 (a web-based visual builder), and an open-source Open Agent Platform for no-code agent creation. Finally, he identifies agent deployment as the next major challenge, introducing the now generally available LangGraph Platform designed for long-running, bursty, and stateful agent workloads.
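To make the second belief concrete, here is a minimal sketch of assembling context from the sources the talk names: a system message, user input, tool results, retrieval steps, and conversation history. It is plain Python and does not use the LangChain or LangGraph APIs; `ContextParts`, `build_messages`, the `max_history` parameter, and the message dictionary shape are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ContextParts:
    """Hypothetical container for the pieces that end up in a prompt."""
    system_message: str
    user_input: str
    tool_results: list[str] = field(default_factory=list)
    retrieved_docs: list[str] = field(default_factory=list)
    history: list[tuple[str, str]] = field(default_factory=list)  # (role, text)


def build_messages(parts: ContextParts, max_history: int = 4) -> list[dict[str, str]]:
    """Assemble an ordered message list; the ordering here is an assumption."""
    messages = [{"role": "system", "content": parts.system_message}]

    # Retrieved documents are grouped into one block so they are easy to inspect.
    if parts.retrieved_docs:
        docs = "\n".join(f"- {doc}" for doc in parts.retrieved_docs)
        messages.append({"role": "system", "content": f"Retrieved context:\n{docs}"})

    # Keep only the most recent turns so the prompt stays bounded.
    recent = parts.history[-max_history:] if max_history > 0 else []
    for role, text in recent:
        messages.append({"role": role, "content": text})

    for result in parts.tool_results:
        messages.append({"role": "tool", "content": result})

    # The current user input always goes last.
    messages.append({"role": "user", "content": parts.user_input})
    return messages
```

Because every part is an explicit field, a developer can see and change exactly what goes into the model call, which is the kind of control the talk attributes to a low-level orchestration framework.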

Auto-Highlights

- agent engineer (count: 4, rank: 0.09)
- LangChain (count: 7, rank: 0.09)
- LangGraph (count: 7, rank: 0.09)
- LangSmith (count: 7, rank: 0.09)
- different models (count: 3, rank: 0.08)
- model optionality (count: 2, rank: 0.07)
- context engineering (count: 2, rank: 0.07)
- observability (count: 5, rank: 0.07)
- evals (count: 4, rank: 0.06)
- prompt engineering (count: 3, rank: 0.06)
- agent deployment (count: 2, rank: 0.06)
- LangGraph Platform (count: 2, rank: 0.06)
- Open Agent Platform (count: 1, rank: 0.05)
- LangGraph Studio v2 (count: 1, rank: 0.05)

Transcript

(00:00) Guys, welcome to Interrupt. I'm so excited. Yeah, let's go. This is the first time we're doing this, and I'm so excited and honored that all of you chose to, you know, take a day to spend it with us and the amazing lineup of speakers and sponsors that we have here, to hear

(00:27) and learn about agents and AI and everything that's going on. We've tried to pull out all the stops. We made that video that was all AI generated. So we've really tried to make it an incredible experience, and we're really excited for what the day has in store. It feels

(00:44) incredible that we're doing this. A little over two years ago, we launched LangChain as an open-source project, a nights-and-weekends thing that was really born out of talking to folks who were building things with AI at events like this. And, you know, that was a month before ChatGPT was

(01:06) launched, and after that there was this huge explosion of interest in the space as people wanted to take these ideas that they saw and use them on their data, in their company, for their application. And there was clearly something that captured the imagination of folks who were building

(01:25) at the time, even if, you know, the models were way worse than they are today, and even if there was still a lot of work to do. There was that spark of imagination and of interest, and we saw a lot of people getting started, and that's where LangChain was. It was helping people get started

(01:43) quickly building these prototypes that could amaze and wow. And then we saw that there was a lot of struggle when you tried to go to production. And I think this has been a story that we saw relatively quickly, but it's continued to be the trend over the past two years or so. It's easy to

(02:00) get something working. That's the magic of these LLMs. They're, you know, powerful and amazing, but it's hard to get that to something that's reliable enough to actually move the lever in real business applications. And that's both bad, because it's not easy, but

(02:20) that's also good, because that means there's a lot of value to be created from everyone here. And this delta between the ease of prototyping and the difficulty of getting to production is really what led us to turn what was an open-source project into something more than that,

(02:41) into a company, and build a company around it. And this is a slide from the original, not with the background obviously, but the language. This was from our original pitch deck when we were starting the company, in terms of what we wanted to do, what the mission was, and we

(02:57) want to make intelligent agents ubiquitous. We think that LLMs are amazing. We think they're super powerful. They can transform the types of applications that we can build. But we think there's a lot of tooling that needs to be built around them to really help us take advantage of all their capabilities. And so this was the

(03:17) mission that we set out with. And over the past few years of working with you all on these problems, we've learned a little bit about what that looks like, but we've also learned that there's a lot left to do. So for a few minutes right now, I want to talk about some of the things that we've

(03:38) learned, but also where we're headed and what we think the next things are. So what are some of the ingredients of building agents? Let's maybe start there. What goes into them? One core component is obviously prompting. The new things about agents are the LLMs. That's why we're all here. And

(03:56) when you interact with an LLM, you prompt it. You send it some text. You get back some response. And so being good at prompting is a core component of building these agents. We also think that engineering is a core component. So our target audience is developers. We think that there are a lot of engineering

(04:16) skills that go into building reliable agents. Whether it's the tools that they're using and interacting with, whether it's the patterns that they're using to do the data pipelines that bring the context to the LLM at the right point in time, whether it's the deployment, there's a lot of engineering that goes into building

(04:33) agents. There's a lot of product sense and product skills as well. So this is similar to the product engineer before, but now when we're building these agents, we're often building them to do workflows that a human or a group of humans would do. And so having the product sense and intuition and skill to

(04:51) understand those flows and then try to replicate them with an agent is a really important skill. And finally, there are some aspects of machine learning that are involved. So most prominently with evals, we see this being a great way to test and measure these agents and capture the non-determinism with

(05:11) some metrics over time. And there are other things like fine-tuning as well. And so there's absolutely some aspect of machine learning here. And the combination of all of these skills has really merged into what we see being the agent engineer. And so this combines different aspects of all of these. And

(05:30) it's early on, so this is still being defined, how much and which of these areas are important, but we see this new profile of builder, which combines all of these, representing the agent engineer. And so when we think about LangChain and the mission of the company, in order to make these

(05:46) intelligent agents ubiquitous, we want to support all the agent engineers out there. And I think we're a room full of agent engineers here, or at least people who are trying to be, who are moving in that direction. And so this is how we think of the personas that we're trying to speak to

(06:05) and empower. And so when we think about that, I think one question that we ask ourselves is: what will the agents of the future look like? We want to see what those agents look like and then build tools to help build those agents. That's what's interesting to us. That's why we're all here. And so as

(06:22) we think about what these agents look like, there are a few beliefs that we have. And so I want to walk through three of them which we think are more in the present now, and then three of them which we think are in the future. So the first belief that we have is that agents will rely on many

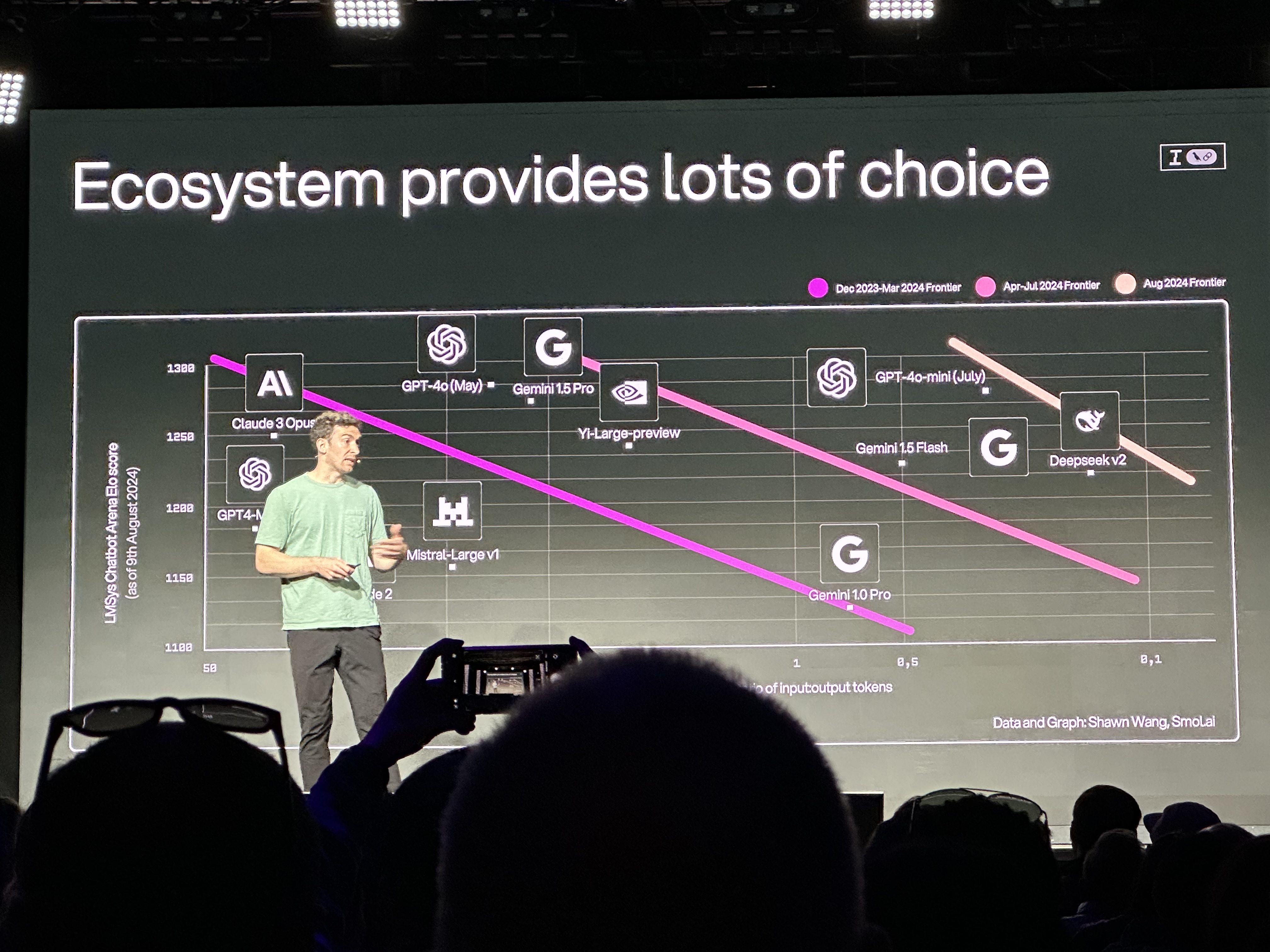

(06:40) different models. So what does that mean? Over the past year, and the last few months specifically, there have been a lot of different models coming onto the playing field. They have different strengths and weaknesses. Some of them are really costly but they can reason for a long time. Some of them are faster

(07:00) and they're better for specific tasks. Some of them are great at reasoning. Some of them are great at writing. And so there's this whole ecosystem of models out there, giving developers the choice of which model is best for them at a particular point in their agent. So an agent might use many

(07:19) different models, and we see that being an increasingly common thing. And this is really what we've turned the original LangChain package into. So there was a lot in the original LangChain. A lot of it was aimed at making it easy to get started. A big part of that was the integrations, and that's really what we've focused and

(07:38) doubled down on in the past year or so. We've turned LangChain into a place for integrations of all types, but specifically for language models, as we've seen this be the key component of building these applications, and it's provided a stable ecosystem for interacting with all the different model providers and

(07:58) model options that are out there. As we've really focused in on this being the core use case for LangChain, we've seen that the stability and focus have caused LangChain to continue to grow. And, you know, this speaks to the model optionality that developers want as well. And so in the last month alone, we

(08:24) LangChain did 70 million monthly downloads. That's in the last month alone, not aggregate. And we see this trend where developers increasingly want the flexibility to switch between models. And I think this is interesting to look at not just alone, but also if you compare to

(08:44) a benchmark, which we all have in our mind when we think of gen AI, which is OpenAI. You know, they're what people think of. And if you look at their download stats over the last few months, you'll actually notice that LangChain, again driven by the different model options that are out

(09:00) there, has actually become more popular in terms of Python downloads than the OpenAI SDK. And this speaks to the fact that developers want model optionality and they're choosing LangChain to get it. The second belief that we have is that reliable agents start with the right context. So what does this mean?

(09:21) So prompting is really important. The prompt that you construct to pass into the LLM will determine what comes out of the LLM, and that will determine the behavior of the agent. This prompt isn't just one big string. It's actually made up of a bunch of different parts. And all these parts come from different places. So

(09:36) they could come from a system message. They could come from user input. They could come from tools. They could come from retrieval steps. They could come from the conversation history. And so when you construct this context that you're passing into the LLM, it's really, really important to be able to control

(09:53) exactly what goes in there, because that will affect what comes out. And so in order to provide this control and flexibility in this context engineering, we've started moving all of our agent orchestration over to LangGraph. So we launched LangGraph a little over a year ago. It's an extremely low-level, unopinionated

(10:14) framework for building agents. There are no hidden prompts. There are no hidden cognitive architectures. You can create the flow of the agent that you want. So, you can do all the necessary steps to get the right context and then you can pass that in whatever form to the LLM. And so, you have

(10:33) supreme control over all of it. And this controllability to build the cognitive architecture that you want is a key selling point of LangGraph as the agent orchestration framework. And of course on top of this control we've tried to add in functionality that doesn't get in the

(10:53) way of adding the right context. So streaming, human-in-the-loop support, short-term memory, and long-term memory. We don't tell you how to use these things, but we give you low-level primitives so that you can build it into the agent yourself. And so this focus on controllability has really helped LangGraph stand out, and we're

(11:11) recommending that all complex agent orchestration things be built on top of it. Now the last belief that we have is that building agents is a team sport. So the two things I talked about so far, LangGraph and LangChain, are both aimed at developers. They're tools for engineers. But

(11:33) we think there are all these different areas, prompting, product, machine learning, that are involved in building agents. And yes, ideally one person, the agent engineer, would have all of these aspects, but it's early on. We're still figuring out what this means. And so building agents is becoming a team

(11:49) sport. And the way that we're helping with that, or trying to help with that, is LangSmith. So with LangSmith, we think of the observability that it provides as a really integral way for everyone, but especially product people, to see what's going on inside the agent. So you can see all the steps that are happening,

(12:21) you can see the inputs and outputs. And so if you're trying to replicate a human workflow that you understand, this provides the best pane of glass into what's happening. I mentioned evals being important; this is where the machine learning knowledge comes into play. And so we try to make it

(12:36) incredibly easy to build data sets and run evals both offline and online in LangSmith and provide that team functionality there. And finally, prompting. Prompting is a key part of building agents. We have a prompt hub. We have a prompt playground. The reason that all of these are in the same platform, LangSmith, is because we think

(12:54) building agents is a team sport, and there needs to be this platform for all these people of different backgrounds and strengths to collaborate on agents in one place. And so that's where we are today. And I want to emphasize that agents are here. We're going to be hearing later on from a bunch of folks who have

(13:18) built agents, and so you'll hear about that. But I also think that they've been around for a little bit. You know, people are saying 2025 is the year of agents. I think 2024 is really when we started to see a lot of these come online, and so agents are definitely here today. We have a number

(13:34) of these companies speaking about how they're building agents. We'll talk with Replit next, and code gen has obviously been the biggest space that's been transformed, but we've seen a lot in customer support, in AI search, in copilots. And so agents are here, they're possible to build, and hopefully today hearing the

(13:55) stories of a lot of people who are building will show that. The thing that we see happening this year is that agents that were built last year are starting to get more and more traction at larger scales. You can see that in the traction that we've had in terms of traces coming in. So you can see that at

(14:22) the start of this year there's kind of been this influx in just trace volume and this speaks to the fact that agents not only are here but they're being used consistently and providing value to folks. So those are three beliefs that we've built up over the past few years about what it's like to build agents now. So

(14:42) where do we think the industry is headed? What are some beliefs we have about the future? As we see more and more agents going into production, one of the things that we're starting to believe more strongly is that AI observability is different than traditional observability. So what I mean by that is when you're

(15:04) dealing with agents, you're getting all of these large, unstructured, often multimodal payloads that are coming in to a platform. And those are some technical differences from traditional observability. But also what's different is the user persona that the observability logs are being used for. They're not built for an

(15:23) SRE. They're built for this agent engineer persona. And that needs to bring in some of these ML concepts, some of those product concepts, some of that prompt engineering context, and provide this different type of AI observability. And we've always had AI observability in LangSmith, from traditional metrics to business metrics

(15:44) to more qualitative metrics. And today we're excited to launch a new series of metrics around agents. So specifically, we're launching better insight into the tools that your agents are using. So you can track the run counts of tools, the latencies, the errors. And then we're also launching trajectory observability so you can see

(16:07) which paths your agents are taking and again the latency and errors associated with that. And so this is available today in LangSmith. If you go and send a bunch of traces, you can start to see this populate. The next belief we have is that everyone will be an agent builder. So when we talk about this

(16:30) agent engineer, it combines these four different aspects. And realistically right now it's so early on that no one really is at the center of all this and has all of these skills. And so yes, we want to make it possible for people to collaborate and build agents as a team sport, and this is LangSmith. But we also want to try to

(16:50) move folks who are maybe in one of these quadrants, in a traditional engineering background or in a product background or in an ML background, more towards the center so that they can build agents. So what does that mean? So if we think about developers who don't have a background in AI and aren't familiar

(17:09) with this, how can we enable them to build agents more easily? The thing that we've been building towards this, and we've launched a few things over the past few months in this, is what we're calling LangGraph pre-builts. So these are common agent architectures for the variety of different agent types that we see out

(17:28) there. So single agents, agent swarms, supervisor agents, there's some other ones as well. We want to make it really easy for anyone who doesn't understand agents or is coming to it from an engineering background to easily get started with these common architectures. At the next level, we want to make it possible for people who

(17:48) are on these product engineering teams but maybe not developers themselves to be more involved in building agents. So, one of the coolest things that we launched maybe a year ago at this point is LangGraph Studio. And so today we're excited to give it a facelift. We're launching LangGraph Studio v2. No more

(18:06) desktop app, so you can now run it if you're not on Mac. And it comes with a bunch of improvements as well. So you can see all the LLM calls in a playground directly in the studio. You can build up data sets here. You can modify prompts as well. So you can start to modify the agent, and I

(18:26) think most excitingly, you can pull down production traces from LangSmith into your local LangGraph Studio so that you can start to modify the agent. It will then hot reload, and then you can try to fix these production issues that you're seeing. And then finally we want to make it possible for more and more people who

(18:45) aren't developers at all to build agents from scratch, not just on product engineering teams. And so when we think about folks at larger enterprises, there are often a number of tasks that they want to do and build agents for, and it's tough to get engineering resources to start. And so

(19:02) we want to make it easier and easier for folks to build agents in a no-code way. And so today we're launching the open-source Open Agent Platform. It's powered by LangGraph Platform. It uses agent templates to allow people to build agents in a no-code way. It comes with a tool server that uses MCP. It comes with

(19:23) RAG as a service so you can easily get started with anything related to RAG, and it contains an agent registry so that you can see all the different agents that you've created. And so this is open source. You can check it out today. And finally, the last belief we have is that deployment of agents is the

(19:42) next hurdle. So, it's possible to build agents. We've talked about what it looks like. Once you build an agent, you then need to deploy it. And sometimes this can be easy. Sometimes you can stand up a traditional web server and put it behind it. But we see more and

(19:58) more that agents are looking a little bit different than traditional web apps. So, specifically, they're often long running. We see agents, deep research is a great example, that take 10 minutes to run. We see agents that are taking an hour or 12 hours to run. They're often bursty in nature. So, especially if you

(20:16) kick them off as background jobs, you might be kicking them off hundreds or thousands at a time and they're flaky. They're flaky in a bunch of different ways. The, you know, the calls to the LLM might fail, but also you want to have human in the loop because these LLMs might not do what you expect. And so, you need some

(20:33) statefulness in these agents to allow these human-in-the-loop or human-on-the-loop interaction patterns. And so we've seen these patterns crop up. We think that agents are going to become more and more long-running, more and more bursty, and more and more stateful. And so we want to help people tackle this deployment challenge. And so

(20:54) LangGraph Platform we launched in beta about a year ago, and today we're excited to announce that it's officially generally available. So what's in LangGraph Platform? If you haven't checked it out, there are 30 different API endpoints that we stand up for everything from streaming to human in the loop to

(21:12) memory. It scales horizontally so it can handle burstiness. It's designed for these long-running workloads, and you can actually expose the agents that you deploy here as MCP servers. It also comes with a control plane where you can discover the agents that everyone at your org has deployed. You can share agents. You can reuse

(21:30) these agents with templates. And then there are a few different deployment options. So we have a cloud SaaS offering as well as hybrid and then fully self-hosted options. So you can try this out if you go to LangSmith today. And if you're interested in any of the hybrid or fully self-hosted, feel free to get in touch.
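The deployment concerns described above (long-running work, flaky model calls, and the need for state so a run can pause and resume) can be sketched in a few lines. This is an illustrative stand-in written in plain Python, not the LangGraph Platform API; `run_with_checkpoints`, the in-memory `checkpoints` dictionary, and the retry parameters are hypothetical.

```python
import time
from typing import Callable

State = dict[str, object]
Step = Callable[[State], State]


def run_with_checkpoints(
    steps: list[Step],
    state: State,
    checkpoints: dict[str, tuple[int, State]],
    run_id: str,
    max_retries: int = 3,
    backoff_seconds: float = 0.0,
) -> State:
    """Run steps in order, retrying failures and saving state after each step."""
    # Resume from the last saved checkpoint if this run was interrupted earlier.
    start = 0
    saved = checkpoints.get(run_id)
    if saved is not None:
        start, state = saved

    for index in range(start, len(steps)):
        step = steps[index]
        for attempt in range(1, max_retries + 1):
            try:
                # Pass a copy so a failed attempt cannot corrupt the saved state.
                state = step(dict(state))
                break
            except Exception:
                if attempt == max_retries:
                    raise
                time.sleep(backoff_seconds * attempt)
        # Persist progress so a crash or a human pause does not lose work.
        checkpoints[run_id] = (index + 1, state)

    return state
```

In a real deployment the checkpoint store would be durable rather than an in-memory dictionary, and a human-in-the-loop pause could be modeled as stopping after a checkpoint and calling the function again later with the same `run_id`.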

(21:50) That wraps it up for the keynote and before we go on to the next section, I want to thank a bunch of people because this is a fantastic event and I'm really grateful for a number of people who have made this possible. So Cisco customer experience is our presenting sponsor. You'll hear from them later on. They've

(22:12) done a fantastic job at building some really complex agents that are transforming how they do work. And I'm excited for you all to hear more about that. We have a bunch of incredible sponsors. I saw there were a lot of lines at the booths out there, and I think that's in part because we have

(22:32) some of the most interesting agent companies in the world sponsoring this event. And so I'd highly encourage everyone to go check them out and talk to them. I think, you know, we have some ideas of what the future will look like, but so do the people here. And so I'd encourage everyone to go check them out

(22:50) and talk to them later on. And then finally, I want to thank all of the speakers. We have an incredible lineup. I think everyone here is going to learn a ton. We've tried really, really hard to bring together a collection of folks who have actually built agents and put them in production so that you can hear from them and learn

(23:10) from them what that looks like. And so I'm really excited to hear a lot of these talks and I hope you all are as well.